Data-driven marketing

In the past, to be a good marketer, you were expected to have a sixth sense. There was no obvious way to predict who would rise to the Olympus of marketing. Now, with data-driven marketing, we have moved from an art to a science.

Cookie-based destinations

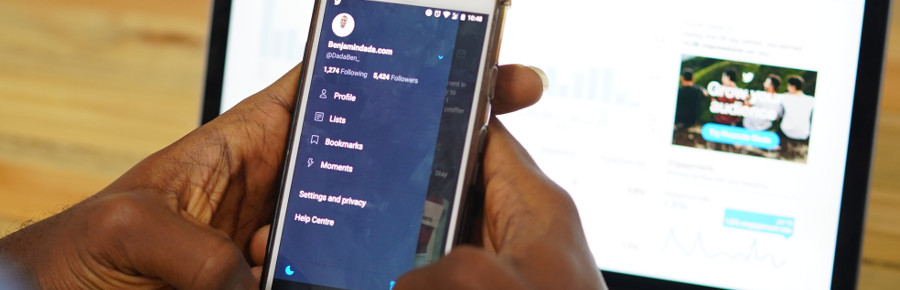

As often happens, when I was about to write about cookie-based destinations, I realised that I had not covered the basics. So, I decided to postpone this post and write another one on how a DMP works at a very high level. Now I can finally write about one of Audience Manager's features.

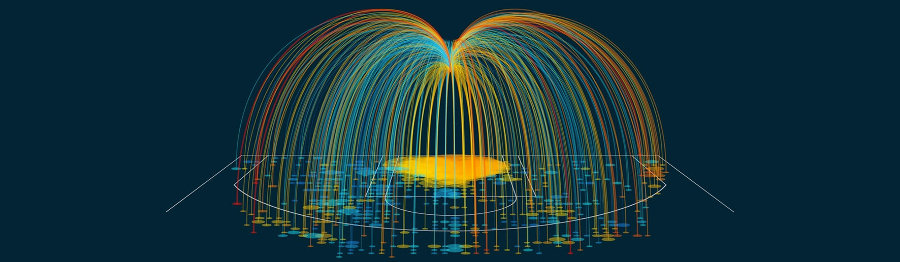

DMPs: data in, data out

I still remember vividly how, after coming back from my summer holidays in 2014, my manager told me to book a flight to NYC to get trained on Adobe Audience Manager (AAM). The training was hit and miss: I understood some concepts, while others were totally alien to me. It did not help that, after this training, I spent 6 months without working on AAM projects.

Bots and the Adobe Experience Cloud

Now that you are familiar with what a bot is, I am going to explain how the Adobe Experience Cloud (AEC) interacts with bots. However, if you landed on this page directly and do not know what a bot is, I suggest you first read my previous post on bots, crawlers and spiders.

Bots, crawlers & spiders

If you have a website, sooner or later it is going to be found by bots. There is no way you can prevent this from happening, so you need to be ready to deal with them. This is the first of a 2-part series on this topic.

More on siloed teams

When I wrote about siloed teams, I left a lot of ideas out. This is a follow-up post, expanding on the same topic. If you have landed on this page for the first time, I suggest you first read my previous post and then come back here.

Definition of Campaign

Like all guilds, digital marketers have their own jargon. People outside the group may find it difficult to understand what certain words mean. One such word is campaign. Different people, teams or companies in digital marketing use it in different ways, often to the exclusion of other meanings.

Big G lead designer story

Many years ago I read a story about the resignation of Google's lead designer. He wrote a bitter post in which he explained why he left. I recommend that you read it before you proceed with my post. Initially, it was just a curious story to me, but now I see profound implications.

Introduction to consumer journeys

The concept of consumer journeys is becoming one of the key techniques in digital marketing. It is an innovative way of creating campaigns, which requires all teams to row together towards a common goal. If you have not heard about them, in the next few posts I will explain consumer journeys in more detail.

Breaking silos

Today I am going to diverge from the typical, more technically oriented posts I have written in the last few months. Most of the companies I have worked with in the last 5+ years had the same issue: different Adobe tools were used by different and disconnected teams. Although this seems like an obvious issue, I wanted to put it in writing.

Profile Merge Rules Configuration

The wait is over. If you have followed my last couple of posts, you know I have been explaining the steps required before you can actually start configuring Profile Merge Rules. These steps are needed, so if you have landed on this post after a search, check them before proceeding with this one.

Declared IDs

In my previous post, I started explaining what profile merge rules are. If you were expecting today's post to document how to configure them, I am not there yet. I still need another building block: declared IDs. With them, I will be in a position to show you how to proceed with profile merge rules.

Introduction to Profile Merge Rules

One of the most difficult features to understand in Adobe Audience Manager is profile merge rules. I thought of diving directly into the configuration, but first I want to explain what problem profile merge rules try to solve and how they solve it. Then I can move on to the code and, finally, the configuration.

Override Last-Touch Channel

My initial goal, when I wrote about attribution, was actually to talk about the configuration option named "Override Last-Touch Channel" in the Marketing Channels reports. However, I realised I needed an introduction to make it clearer, so I wrote my previous post. Now I can go into the details of this technical feature and its consequences.

Some Thoughts on Attribution

During the EMEA Summit 2019, one Adobe customer asked me about a detail of the Marketing Channels configuration. The conversation we then had around this question reminded me of the confusion some managers tend to have about attribution. Let me clarify a few things about this topic.

Site Speed and Adobe Tools

In a recent project I worked on, the client set up a team to analyse the site speed of the website. This resulted in some clashes between them and the Adobe team. Both sides had their own arguments and it was difficult to make progress. Today I want to give you my point of view and some tips on what you can do if you find yourself in the same situation.

A4T and Server-side Digital Marketing

In a client-side implementation, the JavaScript code takes care of Analytics for Target (A4T), so you do not have to do anything. However, as usual, in any server-side or hybrid implementation of Analytics and Target, A4T requires some additional care.

Hybrid Analytics

The last tool I showed in my Summit lab was Adobe Analytics. Initially, I was not sure whether I should write about it. However, I have come up with some ideas to share, and here is the final post on this lab.

Hybrid Target - Part 2

With the Adobe Target server-side code already set up, as I explained in part 1, we are now ready to move to the Adobe Target interface and configure it. I will show how to do it with an Experience Targeting activity, but it should also work with an A/B test.

Hybrid Target - Part 1

The next step after you have a hybrid ECID implementation is to do the same with Target. I already wrote a post on how to create a pure Adobe Target server-side implementation. Now I will explain how to create a hybrid implementation. This post will show the code and the next one, the Target configuration.