Feed The Beast

18 Jun 2023 » Platform

By now, you should understand the Real-Time Customer Profile and the Data Lake. However, these building blocks need data, a lot of data, to operate. To give you an example, I am currently working on an implementation that will host more than 150 million profiles, and this is not the largest we have seen among our customers. This is why I called this post “Feed the beast”.

As usual, and for reference, here is the architecture diagram that I am following throughout this series of posts. Today I am focusing on the “Data Ingestion” building block.

Functionality

As you can imagine, the goal of this building block is to get data into the Adobe Experience Platform (AEP). Although this may seem like a straightforward action, it is not. There are a few steps that need to be taken:

- Get the data into a staging environment.

- Map the original data format into an XDM schema.

- Apply any data transformations.

- Check for any errors.

- Store the data in a dataset.

Obviously, this is an oversimplified list of steps. I am sure the actual implementation is more complex than that, but the extra functionality is not important for this post.
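To make the sequence concrete, here is a toy sketch of those five steps in Python. It is not how AEP works internally: the function names, the flat dotted XDM paths such as `personalEmail.address`, and the "required field" check are all hypothetical, chosen only to show the order in which the steps happen.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

Record = dict[str, Any]


@dataclass
class IngestionResult:
    stored: list[Record] = field(default_factory=list)              # rows written to the "dataset"
    errors: list[tuple[Record, str]] = field(default_factory=list)  # rejected rows and the reason


def ingest(
    raw_rows: list[Record],
    mapping: dict[str, str],                        # hypothetical: source column -> XDM path
    transforms: list[Callable[[Record], Record]],
    required_fields: list[str],
) -> IngestionResult:
    result = IngestionResult()
    staged = [dict(row) for row in raw_rows]        # 1. staging: work on a copy of the input
    for row in staged:
        xdm = {target: row.get(source) for source, target in mapping.items()}  # 2. map to XDM
        for fn in transforms:                       # 3. apply transformations
            xdm = fn(xdm)
        missing = [f for f in required_fields if not xdm.get(f)]  # 4. check for errors
        if missing:
            result.errors.append((row, "missing " + ", ".join(missing)))
            continue
        result.stored.append(xdm)                   # 5. store in the dataset
    return result


rows = [
    {"email": "Ana@Example.com", "fname": "Ana"},
    {"email": "", "fname": "Bob"},
]
mapping = {"email": "personalEmail.address", "fname": "person.name.firstName"}


def lowercase_email(r: Record) -> Record:
    return {**r, "personalEmail.address": (r.get("personalEmail.address") or "").lower()}


res = ingest(rows, mapping, [lowercase_email], ["personalEmail.address"])
print(res.stored)  # Ana's row, with the email lower-cased
print(res.errors)  # Bob's row, rejected because the email is empty
```

The point of the sketch is that errors are caught per record, after mapping and transformation, so one bad row does not stop the rest from being stored.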

Finally, once the data is in a dataset, it is stored in the Data Lake. In addition, if the dataset is enabled for Profile, the data is also forwarded to the Real-Time Customer Profile (RTCP). Remember that sending data to the RTCP does not necessarily mean that anything will change in the profile.
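The routing rule and the "nothing may change" caveat can be illustrated with a toy model. This is only a sketch under loud assumptions: the Data Lake and the RTCP are plain Python containers, and the profile merge is a naive "last write wins" update, which is much simpler than real RTCP merge policies.

```python
from typing import Any

Record = dict[str, Any]

data_lake: list[Record] = []       # toy stand-in for the Data Lake
profiles: dict[str, Record] = {}   # toy stand-in for the RTCP, keyed by identity value


def store_in_dataset(record: Record, identity_field: str, profile_enabled: bool) -> bool:
    """Store a record; return True only if a profile actually changed."""
    data_lake.append(record)                      # every record lands in the Data Lake
    if not profile_enabled:
        return False                              # dataset not enabled for Profile
    profile = profiles.setdefault(record[identity_field], {})
    before = dict(profile)
    profile.update(record)                        # naive "last write wins" merge (assumption)
    return profile != before


ana = {"email": "ana@example.com", "tier": "gold"}
first = store_in_dataset(ana, "email", profile_enabled=True)         # profile created
repeat = store_in_dataset(dict(ana), "email", profile_enabled=True)  # same values again
other = store_in_dataset({"email": "bob@example.com"}, "email", profile_enabled=False)
print(first, repeat, other)  # True False False
```

The second call reaches the profile store, yet the profile is unchanged because the incoming values are identical to what is already there.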

Streaming connectors

One of the value propositions of AEP is that it is real-time. However, it goes without saying that the slowest component in the chain determines the overall speed. Therefore, if you want your new data to be acted upon in real time, you need to send it in real time.
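A quick bit of arithmetic shows why. Assuming the stages run one after another, the worst-case age of a piece of data when it becomes usable is the sum of the stage delays, and the largest term dominates. The delays below are invented for illustration only; they are not measurements of AEP or any source system.

```python
def worst_case_staleness(stage_delays_min: dict[str, int]) -> int:
    """Stages run one after another, so their worst-case delays add up."""
    return sum(stage_delays_min.values())


def bottleneck(stage_delays_min: dict[str, int]) -> str:
    """The stage that contributes the most to the total delay."""
    return max(stage_delays_min, key=stage_delays_min.__getitem__)


# Illustrative numbers only (minutes), not measurements.
nightly_export = {"source exports once a day": 24 * 60, "file transfer": 15, "ingestion": 5}
streamed = {"source emits event": 0, "HTTP call": 1, "ingestion": 5}

for name, chain in [("nightly export", nightly_export), ("streamed", streamed)]:
    print(f"{name}: up to {worst_case_staleness(chain)} min, bottleneck = {bottleneck(chain)}")
```

With a daily export, making ingestion faster barely moves the 1,460-minute total; only changing how the source sends data does.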

To that end, AEP offers streaming connectors, which receive data in real time:

- Datastreams. This is data coming from the edge network. I will talk more about it in a future post.

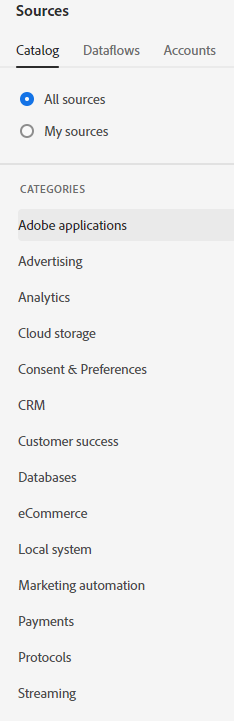

- Adobe and enterprise connectors. Adobe has built dozens of connectors to services commonly used by our clients. However, not all of them support streaming; check the connector description or ask your Adobe representative. Some connectors combine real-time and batch.

- HTTP API. If none of the existing connectors supports your application, you can always create a basic HTTP inlet. In this case, you will likely have to build a custom solution in your systems to send data to it.
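For the HTTP API option, the custom solution boils down to POSTing a JSON message that wraps an XDM record. The sketch below only builds the request with the standard library and does not send it. The inlet URL, schema ID and dataset ID are placeholders, and the `header`/`body`/`xdmMeta`/`xdmEntity` envelope reflects my reading of Adobe's streaming ingestion documentation, so treat the field names as assumptions to verify. Authentication, if your inlet requires it, is omitted.

```python
import json
import urllib.request

# Placeholders (assumptions): replace with the values from your own HTTP API source.
INLET_URL = "https://example.invalid/collection/YOUR-INLET-ID"
SCHEMA_ID = "https://ns.adobe.com/YOUR-TENANT/schemas/YOUR-SCHEMA-ID"
DATASET_ID = "YOUR-DATASET-ID"


def build_streaming_request(xdm_entity: dict) -> urllib.request.Request:
    """Wrap one XDM record in the (assumed) streaming envelope and build a POST request."""
    schema_ref = {
        "id": SCHEMA_ID,
        "contentType": "application/vnd.adobe.xed-full+json;version=1",
    }
    payload = {
        "header": {"schemaRef": schema_ref, "datasetId": DATASET_ID},
        "body": {"xdmMeta": {"schemaRef": schema_ref}, "xdmEntity": xdm_entity},
    }
    return urllib.request.Request(
        INLET_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_streaming_request({"personalEmail": {"address": "ana@example.com"}})
# urllib.request.urlopen(req) would send it; left out so the sketch runs offline.
print(req.get_method(), req.full_url)
```

Each event becomes one small request, which is what keeps this path real-time, and also why the sending side has to handle retries and errors itself.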

Batch connectors

Not all data sources are real-time. For example, databases are typically read in batches. AEP also has a suite of batch connectors:

- Adobe and enterprise connectors. As I have explained above, you can connect to a variety of Adobe and enterprise sources, some streaming, some batch.

- Files. If you cannot use any of these connectors, you can always rely on plain old files. AEP offers the Data Landing Zone (an Azure Blob storage container that comes with the AEP license), connectors to the most common cloud storage solutions (AWS S3, Azure Blob, Google Cloud Storage…), and access to traditional protocols such as SFTP.

Data Prep

AEP can modify the data it ingests on the fly, before storing it in a dataset. Although this functionality is meant for simple changes, the list of supported functions is quite long. Remember that records are processed one at a time, with no access to previous records or to the RTCP profile. If you need more complex transformations, you will need Data Distiller.
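The "one record at a time" constraint is easiest to see in code. In this sketch each target field is computed by a function that receives only the current source record. The lambdas are plain Python standing in for Data Prep's own function library (they are not Data Prep syntax), and the field names are hypothetical.

```python
from typing import Any, Callable

Record = dict[str, Any]

# Hypothetical mapping: target XDM path -> function of ONE source record.
MAPPING: dict[str, Callable[[Record], Any]] = {
    "person.name.fullName": lambda r: f"{r['first'].strip()} {r['last'].strip()}",
    "personalEmail.address": lambda r: r["email"].lower(),
    "loyalty.tier": lambda r: r.get("tier") or "none",
}


def prep(record: Record) -> Record:
    # Every output field depends only on the current record: there is no state
    # carried over from earlier rows and no lookup into an existing profile.
    return {target: fn(record) for target, fn in MAPPING.items()}


rows = [
    {"first": " Ana ", "last": "Lopez", "email": "Ana@Example.com", "tier": "gold"},
    {"first": "Bob", "last": "Smith ", "email": "BOB@example.com"},
]
prepped = [prep(r) for r in rows]
print(prepped)
```

Anything that needs more than the current row, such as a running total per customer or "keep the previous tier if this one is empty", cannot be written in this shape; that belongs upstream or in Data Distiller.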

Before I move on to the next topic, a word of caution. I have noticed that Adobe Data Architects do not particularly like this functionality. They see it as a last resort, only to be used when the upstream system cannot apply the changes. This makes sense: if you are already using an ETL tool or a SQL query to extract the data, you should be able to apply all transformations there. I would recommend you follow their advice and keep Data Prep functions to an absolute minimum.

Dataflows

The previous sections have described the components of the data ingestion process. To actually get the data into AEP, you must configure dataflows. These are the pipes that connect the source system to the dataset, and they include the functionality explained above:

- Authentication. You need to provide the credentials for the source connector to connect to an external system.

- Select data. You choose the tables, files, or other entities that contain the information to be imported.

- Dataflow details. This is where you select the dataset, alerts, and other parameters.

- Mapping. Unless the data is already in XDM format, you need to map the input data into XDM fields. This is where you may apply the data prep functions.

- Scheduling. Only applicable to batch connectors.
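One way to keep those five steps straight is to think of a dataflow as a small configuration object with one field per step. The class below is a hypothetical model for illustration only, not Adobe's Flow Service API; its only rules are the ones stated above (mapping may be empty when the data is already XDM, and scheduling applies to batch connectors only) plus basic "is it filled in" checks.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DataflowConfig:
    """Hypothetical model of a dataflow's steps (not an Adobe API)."""
    source_connector: str
    is_streaming: bool
    credentials_ref: str                  # authentication: reference to stored credentials
    source_entities: list[str]            # select data: tables, files, ...
    target_dataset: str                   # dataflow details
    mapping: dict[str, str] = field(default_factory=dict)  # empty if data is already XDM
    schedule: Optional[str] = None        # scheduling: batch connectors only

    def validate(self) -> list[str]:
        problems = []
        if not self.credentials_ref:
            problems.append("missing credentials")
        if not self.source_entities:
            problems.append("no source entities selected")
        if not self.target_dataset:
            problems.append("no target dataset")
        if self.is_streaming and self.schedule is not None:
            problems.append("scheduling only applies to batch connectors")
        return problems


sftp_flow = DataflowConfig(
    source_connector="sftp",
    is_streaming=False,
    credentials_ref="vault://sftp-creds",
    source_entities=["customers.csv"],
    target_dataset="Customers",
    mapping={"email": "personalEmail.address"},
    schedule="daily at 02:00",
)
http_flow = DataflowConfig(
    source_connector="http-api",
    is_streaming=True,
    credentials_ref="vault://inlet-token",
    source_entities=["web-events"],
    target_dataset="Web Events",
    schedule="hourly",
)
print(sftp_flow.validate())  # []
print(http_flow.validate())  # ['scheduling only applies to batch connectors']
```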

Now that you have your dataflows up and running, you are finally getting data into AEP.

Batch ingestion API

There is one last option to get data into AEP: the batch ingestion API. While batch connectors are pull-based (AEP initiates the connection and requests the data), the batch ingestion API is push-based: you send the data directly to a dataset, bypassing dataflows. For that reason, the data must already be in XDM format when you use this approach.
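The push model means your system drives every call: open a batch on a dataset, upload one or more files already in XDM format, then mark the batch as complete. The sketch below only builds the sequence of requests as `(method, URL, body)` tuples. The base URL and paths follow my reading of Adobe's Batch Ingestion API reference and should be treated as assumptions to verify; authentication headers are omitted, and `batch-123` stands in for the ID that the first call would return.

```python
from typing import Optional

# Assumption: base URL and paths as I read the Batch Ingestion API docs; verify before use.
BASE = "https://platform.adobe.io/data/foundation/import"

Request = tuple[str, str, Optional[dict]]  # (HTTP method, URL, JSON body or None)


def create_batch(dataset_id: str, fmt: str = "json") -> Request:
    """Step 1: open a batch against the target dataset."""
    return ("POST", f"{BASE}/batches", {"datasetId": dataset_id, "inputFormat": {"format": fmt}})


def upload_and_complete(batch_id: str, dataset_id: str, file_names: list[str]) -> list[Request]:
    """Steps 2 and 3: upload each XDM-formatted file, then mark the batch as complete."""
    uploads: list[Request] = [
        ("PUT", f"{BASE}/batches/{batch_id}/datasets/{dataset_id}/files/{name}", None)
        for name in file_names
    ]
    return uploads + [("POST", f"{BASE}/batches/{batch_id}?action=COMPLETE", None)]


# The batch ID would come from the response to step 1; "batch-123" is a placeholder.
plan = [create_batch("dataset-abc")] + upload_and_complete(
    "batch-123", "dataset-abc", ["profiles-1.json", "profiles-2.json"]
)
for method, url, _ in plan:
    print(method, url)
```

Compare this with a batch connector, where the same data would be fetched by AEP on the dataflow's schedule and could pass through mapping on the way in.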