Bots and the Adobe Experience Cloud

26 Jan 2020 » MSA

Now that you are familiar with what a bot is, I am going to explain how the Adobe Experience Cloud (AEC) interacts with bots. However, if you landed on this page directly and do not know what a bot is, I suggest you first read my previous post on bots, crawlers and spiders.

Adobe Analytics

This is the main place where you are going to see “things” about bots in the AEC. But before I get into the features, let me explain a few details of these web crawlers.

Bots and JavaScript

Depending on their JavaScript capabilities, we can classify bots into two types:

- JavaScript blind. These used to be the majority a few years ago, but I do not know what the current situation is. In summary, these bots just download the HTML and, maybe, the referenced assets. However, they do not have a JavaScript engine to execute the JavaScript code. So, if your website relies heavily on this language (e.g. SPAs), these bots will not see what humans get in a browser. This may be a bad thing or a good thing, depending on whether the bot is friendly or unfriendly.

- JavaScript capable. As you can imagine, these bots are much more sophisticated and behave like a proper browser, including the JavaScript engine. Beware, though, as some bots have bad JavaScript implementations and may not execute all JavaScript.

Why is this important? Well, if your Adobe Analytics implementation uses the AppMeasurement library, then you can ignore bots that do not have a JavaScript engine. As I said, they will only download the HTML, but will not be able to create the s object and call s.t(). Consequently, there will be no calls to Adobe Analytics collection servers.

On the other hand, JavaScript-enabled bots will generate Analytics calls. The only special case to consider is if you have implemented Adobe Analytics server-side, in which case you will always get the Analytics calls, irrespective of the type of bot.
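To make the difference more concrete, here is a minimal Python sketch of what a JavaScript-blind bot does with a page. The HTML, the script path and the `BlindBot` class are all made up for illustration; real crawlers are far more elaborate. The point is only that the bot sees the script tags as text and never runs them, so `s.t()` is never called.

```python
from html.parser import HTMLParser

# Hypothetical page: the markup and the script path are invented for this example.
SAMPLE_HTML = """
<html>
  <head><script src="/js/AppMeasurement.js"></script></head>
  <body>
    <h1>Product page</h1>
    <script>s.pageName = "product"; s.t();</script>
  </body>
</html>
"""


class BlindBot(HTMLParser):
    """Collects script references and visible text, but never runs any script."""

    def __init__(self):
        super().__init__()
        self.script_sources = []
        self.text = []
        self._in_script = False

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self._in_script = True
            src = dict(attrs).get("src")
            if src:
                self.script_sources.append(src)

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_script = False

    def handle_data(self, data):
        # Inline JavaScript is just text to this bot: it is skipped, not executed.
        if not self._in_script and data.strip():
            self.text.append(data.strip())


bot = BlindBot()
bot.feed(SAMPLE_HTML)
print(bot.script_sources)  # script files the bot could download as assets
print(bot.text)            # the only content the bot actually "reads"
```

Nothing in this sketch ever sends a request to a collection server, which is why this kind of bot is invisible to a client-side implementation.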

Bot behaviour

The next thing to note is that bots are… bots! No, seriously. They are machines, not humans. When we, humans, browse a website:

- Wait until the page loads, including all the assets (although not always)

- Read the contents

- Click somewhere on the page or abandon it

- Add things to the basket

- Log in

- Purchase or make any other sort of conversion

- Leave a comment

Well, I think you are now getting it. Bots cannot do many of the things in this list. They usually do not have an account, a credit card, an opinion… They just download some or all of the pages, at high speed. In other words, human behaviour and bot behaviour are completely different.
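As a rough illustration of how different the two behaviours are, the sketch below flags a session as bot-like when it requests pages faster than a person plausibly could and shows no login or purchase. The `Session` fields and the threshold are assumptions of mine, chosen only for the example; this is not how Adobe Analytics detects bots.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """Hypothetical summary of one visit; field names are made up for this sketch."""
    page_views: int
    duration_seconds: float
    logged_in: bool
    purchases: int


# Assumed threshold, picked only for illustration.
MAX_HUMAN_PAGES_PER_SECOND = 1.0


def looks_like_bot(session: Session) -> bool:
    # Logging in or purchasing is treated here as a sign of a human visitor.
    if session.logged_in or session.purchases > 0:
        return False
    # Several pages in no measurable time is suspicious on its own.
    if session.duration_seconds <= 0:
        return session.page_views > 1
    return session.page_views / session.duration_seconds > MAX_HUMAN_PAGES_PER_SECOND


print(looks_like_bot(Session(page_views=200, duration_seconds=20.0, logged_in=False, purchases=0)))
print(looks_like_bot(Session(page_views=6, duration_seconds=300.0, logged_in=True, purchases=1)))
```

A heuristic like this will produce false positives and false negatives, so take it as a way of picturing the difference rather than as a filter you should deploy.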

Bots reports

Adobe Analytics is very well aware of the existence of bots. It would drive a web analyst crazy if all the data was combined. Therefore, the first thing Analytics does is to exclude all known bots from the reports. This means that the reports should, in theory, only include human behaviour.

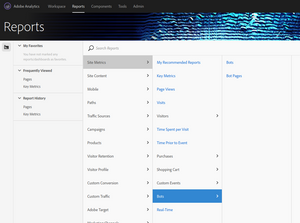

The second thing Analytics does is to create two special reports with bot behaviour, so that the data is not lost. These reports are only available in Reports & Analytics, not in Analysis Workspace.

-

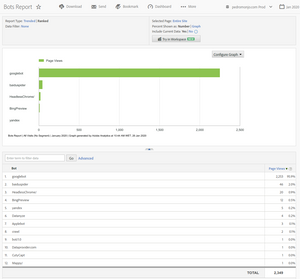

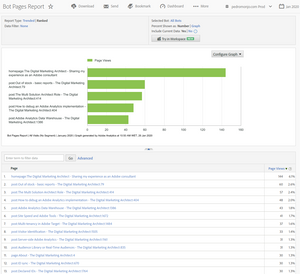

Bots. This report summarizes the number of “page views” for each robot.

-

Bot pages. Shows how many times each page has been “viewed” by a robot.

Some people have asked me why there are no more reports on bots. In reality, there is not much more you can do with them, other than count. As I said, bots are not human and any further analysis will be useless.
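Conceptually, the two reports are just two counts over the same set of hits. The sketch below shows the idea with a handful of invented hits; the data and variable names are hypothetical, and the real reports are of course computed by Adobe, not by code like this.

```python
from collections import Counter

# Hypothetical hits already identified as bot traffic: (bot name, page).
bot_hits = [
    ("Googlebot", "/home"),
    ("Googlebot", "/products"),
    ("Bingbot", "/home"),
    ("Googlebot", "/home"),
]

# "Bots" report: number of page views per robot.
views_per_bot = Counter(bot for bot, _ in bot_hits)

# "Bot pages" report: number of times each page was requested by any robot.
views_per_page = Counter(page for _, page in bot_hits)

print(views_per_bot.most_common())
print(views_per_page.most_common())
```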

Bots configuration

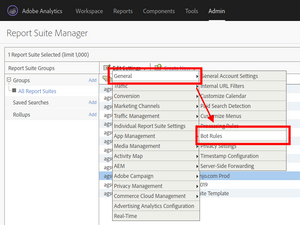

In the previous sub-section I referred to “known bots”, without getting into any details about it. You may be wondering how does Analytics know what is a robot and what is a human. Well, there is a configuration section precisely for this. You can find it in the Report Suite Manager, in the Admin section:

Which will take you to the following page:

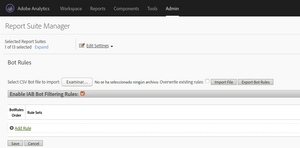

Unless you know what you are doing, you should always activate “Enable IAB Bot Filtering Rules”. As Thomas explained, this is a list of known, friendly bots that the IAB has collected and Adobe has licensed.

However, the IAB list is not always enough, especially with unfriendly or unknown robots. Therefore, the configuration page lets you create your own rules to filter other robots. You can use a combination of User-Agent, individual IP addresses and IP ranges for these rules.
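If it helps to picture how such rules work, here is a small Python sketch that matches a hit against custom rules made of a User-Agent substring, a single IP address or an IP range. The `BotRule` structure, the rule names, the sample values and the decision that all conditions in a rule must hold are my own assumptions for the example; check the Adobe documentation for how the real rules are evaluated.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass
class BotRule:
    """Hypothetical rule shape, invented for this sketch."""
    name: str
    user_agent_contains: Optional[str] = None
    ip_start: Optional[str] = None
    ip_end: Optional[str] = None  # leave as None to match a single address


def matches(rule: BotRule, user_agent: str, ip: str) -> bool:
    conditions = []
    if rule.user_agent_contains is not None:
        conditions.append(rule.user_agent_contains.lower() in user_agent.lower())
    if rule.ip_start is not None:
        address = ipaddress.ip_address(ip)
        start = ipaddress.ip_address(rule.ip_start)
        end = ipaddress.ip_address(rule.ip_end or rule.ip_start)
        conditions.append(start <= address <= end)
    # Assumption: every condition present in a rule must hold for it to match.
    return bool(conditions) and all(conditions)


# Example rules using documentation-reserved IPv4 addresses.
RULES = [
    BotRule("Internal monitor", user_agent_contains="UptimeCheck"),
    BotRule("Scraper range", ip_start="203.0.113.10", ip_end="203.0.113.20"),
    BotRule("Single host", ip_start="198.51.100.7"),
]


def is_bot(user_agent: str, ip: str) -> bool:
    return any(matches(rule, user_agent, ip) for rule in RULES)


print(is_bot("Mozilla/5.0 UptimeCheck/1.0", "192.0.2.1"))
print(is_bot("Mozilla/5.0", "203.0.113.15"))
print(is_bot("Mozilla/5.0", "203.0.113.21"))
```

The sketch only handles IPv4 addresses, which is enough to show the three kinds of condition mentioned above.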

More information in the help section: https://docs.adobe.com/help/en/analytics/admin/admin-tools/bot-rules/bot-rules.html.

Adobe Experience Manager

The other main tool affected by robots is Adobe Experience Manager (AEM). You have probably already guessed that I am not an expert in this tool, so I am not going to get into as much detail as with Analytics. Besides, AEM is so complex and vast that, even if I tried, covering it properly would take a long series of posts.

Instead, I am going to give you some points to take into account:

- Web traffic. For obvious reasons, you will see an increase in the amount of traffic your AEM servers receive. In general, this increase should not be too big. Well-behaved robots are very conscious of that and try to minimise the traffic they generate. The problem comes with unfriendly robots, which could potentially bring down your website. To avoid this situation, your network engineers should have enough protections in front of AEM.

- SEO. When designing your website, the agency needs to be aware of SEO and how search engines will index the content. AEM has nothing specific to force you to create a good website for search engine crawlers. In fact, it does not make any sense, as the release cycle of AEM is way slower than the pace at which search engine rules change. The best solution is to have an SEO team to take care of these details. It goes without saying that you should be very careful with black-hat SEO.

- Robots.txt. AEM can be used to create your robots.txt file.

- Best practices. Adobe has published a list of best practices for AEM: https://docs.adobe.com/content/help/en/experience-manager-65/managing/managing-further-reference/seo-and-url-management.html. I also recommend this blog post from one of our partners: https://www.netcentric.biz/insights/2019/05/cms-seo.html.
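Regarding the robots.txt point above, the following sketch shows how a well-behaved crawler would read such a file, using Python's standard `urllib.robotparser` module. The rules, the paths, the domain and the bot name are invented for the example; they are not something AEM generates by default.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, made up for this example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /content/private/
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A friendly bot checks each URL before requesting it.
print(parser.can_fetch("ExampleBot", "https://www.example.com/content/public/page.html"))
print(parser.can_fetch("ExampleBot", "https://www.example.com/content/private/page.html"))

# It also honours the requested pause between requests, in seconds.
print(parser.crawl_delay("ExampleBot"))
```

Keep in mind that only friendly bots bother to do this; an unfriendly robot will simply ignore the file.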

Other Adobe tools

It is safe to say that you can ignore bots in all other Adobe tools. At least, I have never seen a customer adding anything special in Target, Audience Manager or Campaign.

Image by rawpixel.com