Enhancing the Security of your DTM Implementation

17 Jan 2016 » Launch

[UPDATE] This is an old post, which I keep for historical purposes. DTM is no longer available.

We are all aware of the importance of creating secure products. In a previous post, I explained how to set up a workflow for a DTM implementation. One of the consequences of using this workflow is that only a small number of users can cause damage to the website via DTM. This is also good from a security perspective, as it reduces the risk of a successful attack. This is probably enough for most companies.

Financial institutions and DTM

Having said that, we must also realise that it is virtually impossible to create a completely invulnerable software product, and DTM is no exception. Banks and similar companies take security to the next level. For them, the minimum security level should be “paranoid”. For obvious reasons, this paranoid level of security also applies to DTM. If an attacker gained access to DTM, he could very easily inject malicious JavaScript code into the whole website, just by adding a page load rule.

There are many places in DTM where an attack could take place; this is what is called the attack surface:

- Use brute force to obtain the password of one of the publishers or administrators.

- Find a vulnerability in the DTM UI and gain control of an account.

- Find a vulnerability in Akamai (current provider for DTM) and gain control of the servers.

I am not implying that DTM is insecure; I am 100% sure that the developers take security very seriously, but we must never forget that there can always be a hidden security hole.

Self-host DTM library

The immediate solution to reduce the attack surface is to self-host the DTM library. You would just use the DTM UI to create the rules and, once the development is finished, download the DTM JavaScript library. You can then run an audit of the files and host them on your own servers, which you have already hardened. With this approach, all three security concerns raised in the list above are gone. Even if an attacker gained access to DTM, he could not modify the DTM library on your production servers.
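One way to make sure that the files you host are exactly the files you audited is to record a checksum for each of them at audit time and compare again before every deployment. What follows is only a minimal sketch of that idea, not part of DTM: the function names and the folder layout are my own assumptions.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of one file."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def build_manifest(library_dir: Path) -> dict[str, str]:
    """Map each file's relative path to its digest (run right after the audit)."""
    return {
        p.relative_to(library_dir).as_posix(): sha256_of(p)
        for p in sorted(library_dir.rglob("*"))
        if p.is_file()
    }


def changed_files(library_dir: Path, manifest: dict[str, str]) -> list[str]:
    """List files that were added, removed or modified since the audit."""
    current = build_manifest(library_dir)
    return sorted(
        name
        for name in current.keys() | manifest.keys()
        if current.get(name) != manifest.get(name)
    )
```

You would call `build_manifest` on the downloaded library once the audit is done, keep the result somewhere safe, and refuse to deploy if `changed_files` returns anything.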

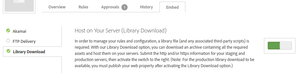

In order to do this, you first need to enable the “Library download” option, under the “Embed” tab.

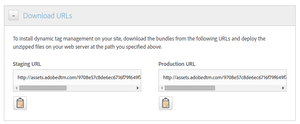

After configuring the options, you get a URL to download both the staging and production libraries:

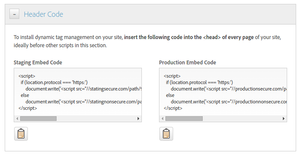

Remember to update the links to the library in the HTML, as specified in the “Header code” section.

DTM library workflow

The previous solution works, but it adds a level of complexity for the DTM users. When debugging unpublished rules, the DTM Switch is completely useless, as it only works if the library is hosted on Akamai. You need to use tools like Charles, which make the whole process more complicated: you might need a license, you will need to download the library with every rule change, you need to learn how to manually replace the live DTM files with your local version… One problem has been solved, but a new one has been created. In particular, non-technical people will find this solution quite challenging.

My proposal in this case is to use a hybrid solution:

- Use Akamai for the development environment

- Use self-hosting for the test and production environments

and create a new workflow:

- The report suites in DTM should always point to development and/or UAT values.

- Your friendly marketer should have access to a development server with a working version of the website, where DTM is loaded from Akamai URLs, as with the typical DTM deployment. In this environment, she can easily create and test new rules using the DTM Switch. She should only have user permissions in DTM. Any successful attack on DTM will be confined to the development environment.

- Once the rules have been finished, a tester should verify that they are correct. If everything is correct, the tester should approve and publish the rules. This is different from the workflow I suggested in a previous post: now, the tester has both approve and publish privileges.

- An automated script should regularly download the production DTM library and deploy the JavaScript files to the SIT/UAT/staging environments.

- Security audits of these JavaScript files should be run regularly.

- When pushing the whole development environment to SIT/UAT/staging, another script or process should automatically modify the DTM links in the HTML, to point to the correct server (not Akamai). These links are those in the “Library Download” tab.

- Website testers should only see the approved and published rules and report any errors.

- In pre-production, the report suite ID of the DTM library should automatically be replaced with the production RSID.

- The production environment should be exactly the same as pre-production.
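The step that rewrites the DTM links in the HTML could be sketched as follows. This is just an illustration under my own assumptions: the function name is hypothetical, and the two URL prefixes are example values that you would replace with the ones shown in the “Embed” tab for your own property.

```python
import re


def rewrite_dtm_links(html: str, akamai_prefix: str, self_hosted_prefix: str) -> str:
    """Swap the Akamai prefix for the self-hosted one inside <script src="...">."""
    pattern = re.compile(
        r'(<script\b[^>]*?\bsrc=["\'])' + re.escape(akamai_prefix),
        re.IGNORECASE,
    )
    # A function is used as the replacement so that the new prefix is
    # inserted literally, whatever characters it contains.
    return pattern.sub(lambda m: m.group(1) + self_hosted_prefix, html)


# Example values only; copy the real ones from the DTM UI.
page = '<script src="//assets.adobedtm.com/abc123/satelliteLib-def456.js"></script>'
print(rewrite_dtm_links(page, "//assets.adobedtm.com/abc123/", "//static.example.com/dtm/"))
```

Only `src` attributes of `script` tags are touched, so any other mention of the Akamai URL in the page is left alone.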

It is a complicated solution, but it combines the ease of the DTM Switch for development with the security of self-hosting.

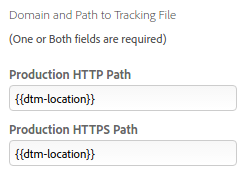

One final note. You will have noticed that the SIT/UAT/staging environment will have a slightly different version of the DTM library from pre-production and production, as the RSID will be different. I would also expect the server names to be different. In this case, one solution is to replace the URLs in DTM with placeholders.

The scripts I mentioned should also do a find and replace in the JavaScript files, looking for these placeholders and replacing them with the correct URLs.
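A find and replace of this kind could look like the sketch below. The placeholder names (`__ASSET_HOST__`, `__RSID__`) and the replacement values are purely hypothetical; use whatever strings you typed into DTM and the values that apply to each environment.

```python
from pathlib import Path


def fill_placeholders(library_dir: Path, values: dict[str, str]) -> list[Path]:
    """Replace each placeholder in every .js file; return the files that changed."""
    changed = []
    for js_file in sorted(library_dir.rglob("*.js")):
        original = js_file.read_text(encoding="utf-8")
        updated = original
        for placeholder, value in values.items():
            updated = updated.replace(placeholder, value)
        if updated != original:
            js_file.write_text(updated, encoding="utf-8")
            changed.append(js_file)
    return changed


# Hypothetical values for one environment:
# fill_placeholders(Path("dtm-library"), {
#     "__ASSET_HOST__": "//static.example.com/dtm",
#     "__RSID__": "examplersid",
# })
```

The same function can be run once per environment with a different dictionary, which keeps the per-environment differences in one place.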